Apple on Tuesday unveiled a comprehensive suite of new accessibility features set to launch later this year across iPhone, iPad, Mac, Apple Watch, and Apple Vision Pro. The announcement — made ahead of Global Accessibility Awareness Day — reinforces Apple's long-standing commitment to making its devices accessible to everyone, including those with visual, auditory, cognitive, and mobility impairments.

What's new

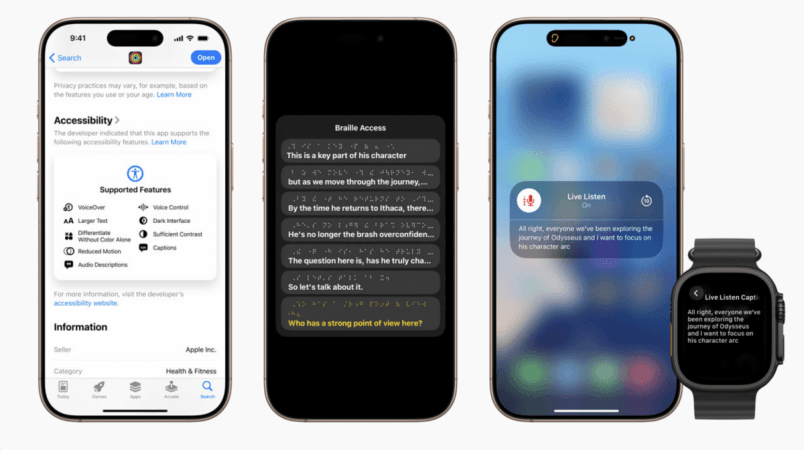

Among the most talked-about features is the introduction of Accessibility Nutrition Labels on the App Store, a new section on app pages that will let users see which accessibility features an app supports, such as VoiceOver, Captions, and Reduced Motion, before downloading it. For the millions of users who rely on these tools, the labels make an app's accessibility support visible up front rather than something discovered only after installation.

Also debuting is Magnifier for Mac, a long-requested feature for low-vision users that turns the Mac into a powerful zoom and object-detection tool, mirroring its iOS counterpart. It integrates with Continuity Camera and Desk View, and users can customize contrast, color filters, and even perspective to make their physical surroundings easier to see.

For users reliant on braille, Apple introduced Braille Access, a full-featured braille note-taking experience on iPhone, iPad, Mac, and Vision Pro. It allows users to open apps, take notes in braille, perform calculations using Nemeth Braille (the braille code for math and science), read BRF files, and even transcribe conversations in real time on braille displays. This is Apple's clearest signal yet that it intends to make braille a mainstream digital tool.

Another notable addition is Accessibility Reader, a new systemwide reading mode designed for those with dyslexia or low vision. It provides granular control over fonts, spacing, and colors, and it supports Spoken Content, making text easier to read both within apps and in the physical world.

Apple Watch is also getting a meaningful upgrade. Live Listen, which turns the iPhone into a remote mic, will now support Live Captions viewable directly on Apple Watch. This allows users to read real-time transcriptions of what their iPhone hears — ideal for meetings, classrooms, or conversations from across the room.

Apple Vision Pro goes all in

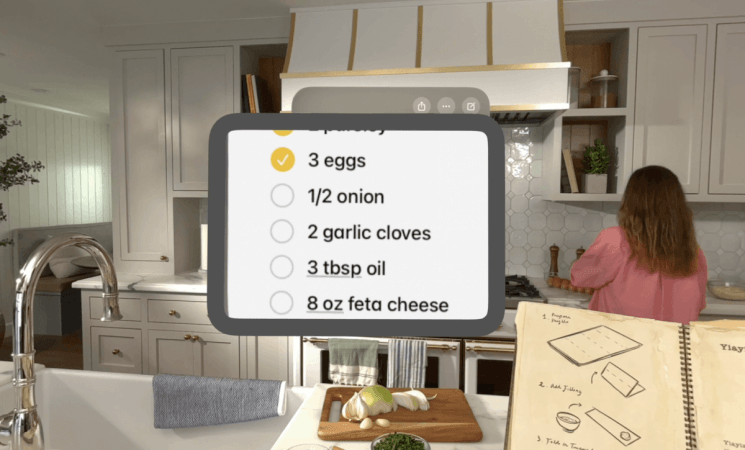

With updates to visionOS, Apple Vision Pro's accessibility potential expands dramatically. A new Zoom feature magnifies the user's surroundings via the headset's camera system. Meanwhile, Live Recognition uses on-device machine learning to describe environments and read documents, and a new developer API will allow approved apps to provide live visual interpretation support — further enhancing hands-free experiences for blind and low-vision users.

There's more

Other significant enhancements include:

- Personal Voice now generates a custom voice in less than a minute using just 10 phrases.

- Eye and Head Tracking improvements simplify navigation and typing for users with motor impairments.

- Vehicle Motion Cues come to Mac, helping reduce motion sickness for passengers in a moving vehicle.

- Sound Recognition now detects when someone calls the user's name.

- CarPlay adds support for Large Text and enhanced sound notifications like baby cries.

- Music Haptics becomes more customizable, with detailed vibration feedback for users who are deaf or hard of hearing.

In a landmark move, Apple is also adding support for Brain-Computer Interfaces (BCIs) via Switch Control — enabling individuals with severe mobility disabilities to interact with devices through thought-controlled technology.

"At Apple, accessibility is part of our DNA," said CEO Tim Cook. "Making technology for everyone is a priority, and we're proud of these innovations that empower people to explore, learn, and connect."