"Technology has forever changed the world we live in." - James Comey

Now, it is about to change reality as we know it. Technology has the capability of making it all happen, "it" being all the futuristic good and evil that we see in movies or read about in novels today. Now, a group of researchers from Carnegie Mellon University has developed a way to transfer the style of one video onto another; in effect, to alter reality.

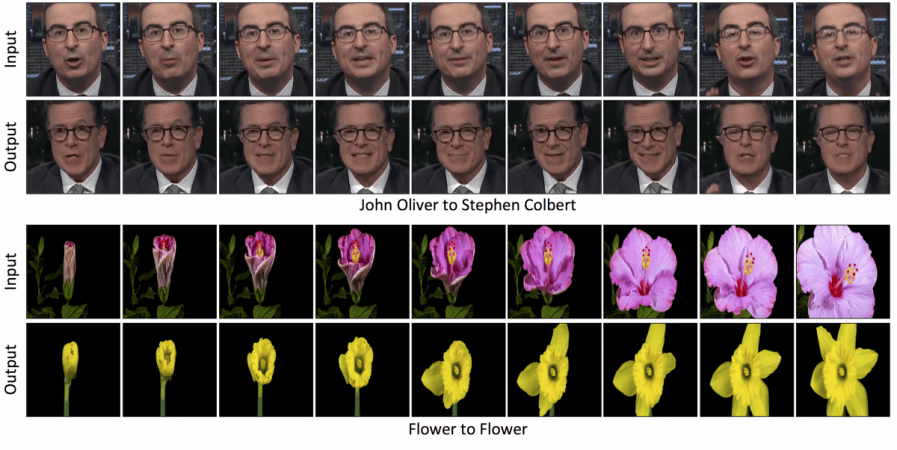

Okay, you may not understand what I am saying at first, but you definitely will after watching the video in which the researchers took a clip of John Oliver talking and made it look like Stephen Colbert.

Isn't it fascinating and scary at the same time?

So, it boils down to this: the technology can make anyone or anything appear to be doing something they, in reality, never did!

"I think there are a lot of stories to be told. It's a tool for the artist that gives them an initial model that they can then improve," said the mind behind this idea, CMU Ph.D. student Aayush Bansal.

Bansal and his team devised this tool to make shooting complex movie scenes easier. In another example, the researchers made it look as though one flower was opening when, in reality, a different flower was blooming.

The system uses what are called generative adversarial networks (GANs) to change the style of one image into another without needing much matching data. However, GANs are also known to introduce artifacts that can spoil the videos as they play.

In a GAN, the researchers create two models. One is a discriminator, which learns to detect whether an image or video is consistent with a particular style; the other is a generator, which learns to create images or videos that match that style. As these two models compete with each other, the system learns to transform content into the target style.
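For readers curious how that competition is usually written down, the original GAN formulation (Goodfellow et al., 2014) frames it as a two-player minimax game. Here $G$ turns random noise $z$ into a sample, and $D(x)$ is the discriminator's estimated probability that $x$ is real rather than generated. This is the generic textbook objective, shown only for illustration; it is not necessarily the exact loss used in the CMU work.

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to push this value up by scoring real samples high and generated ones low, while the generator tries to push it down by producing samples the discriminator mistakes for real ones.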

Bansal and his team have also developed Recycle-GAN, which reduces these flaws by using "not only spatial, but temporal information."

"This additional information, accounting for changes over time, further constrains the process and produces better results," the researchers wrote in their paper.
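To make "temporal information" a little more concrete, here is a toy sketch of a time-aware "recycle" penalty along the lines the Recycle-GAN paper describes. Past frames of video X are translated into style Y, the next Y frame is predicted, that prediction is mapped back to X, and the result is compared with the true next frame. This is a simplified illustration under loud assumptions: each "frame" is a single number, and `g_y`, `g_x` and `p_y` are hypothetical stand-ins for trained networks. It is not the researchers' code.

```python
from typing import Callable, Sequence

Frame = float  # assumption: a "frame" is reduced to one number for illustration


def recycle_loss(
    x: Sequence[Frame],
    g_y: Callable[[Frame], Frame],            # hypothetical: translates an X frame into style Y
    g_x: Callable[[Frame], Frame],            # hypothetical: translates a Y frame back into X
    p_y: Callable[[Sequence[Frame]], Frame],  # hypothetical: predicts the next Y frame from past Y frames
) -> float:
    """Sum of squared errors between each true next X frame and its
    reconstruction via X -> Y -> predict next Y -> back to X."""
    total = 0.0
    for t in range(1, len(x)):
        past_in_y = [g_y(frame) for frame in x[:t]]  # translate frames 0..t-1 into Y
        predicted_next_y = p_y(past_in_y)             # guess frame t in Y
        reconstructed = g_x(predicted_next_y)         # map the guess back to X
        total += (x[t] - reconstructed) ** 2          # penalize temporal mismatch
    return total


if __name__ == "__main__":
    video = [0.0, 1.0, 2.0, 3.0]
    double, halve = (lambda f: 2 * f), (lambda f: f / 2)
    # A predictor that follows the motion (+2 per step in Y) reconstructs perfectly.
    print(recycle_loss(video, double, halve, lambda past: past[-1] + 2))  # 0.0
    # A predictor that ignores motion (repeats the last frame) is penalized.
    print(recycle_loss(video, double, halve, lambda past: past[-1]))      # 3.0
```

The point of the design is that a translation which looks fine frame by frame can still be penalized if it breaks how the video evolves over time, which is the extra constraint the researchers credit for better results.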

Now, although the researchers' aim is to help artists, the technology can, most definitely, be used to develop so-called Deepfakes. This would let the wrong people simulate someone saying or doing something that he or she never really did. The researchers are well aware of this problem.

"It was an eye-opener to all of us in the field that such fakes would be created and have such an impact. Finding ways to detect them will be important moving forward," said Bansal.
