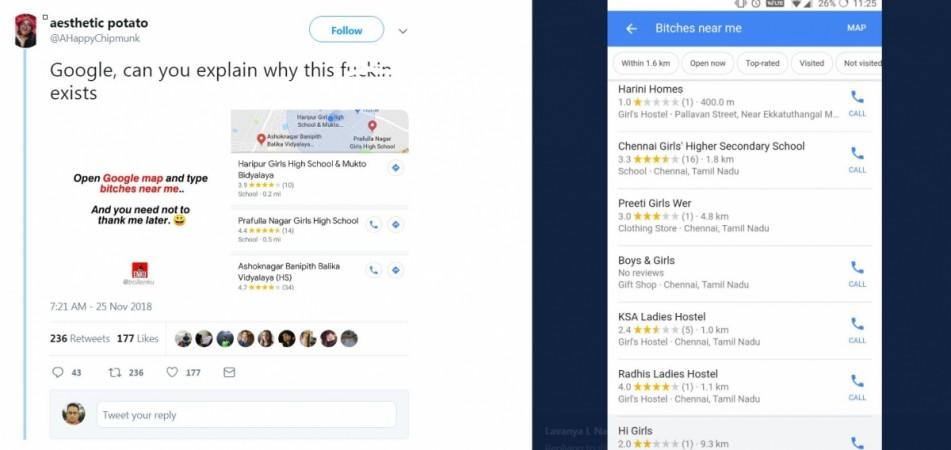

Search engine giant Google is facing a public backlash in India over Google Maps search results, which tend to list girls-only schools, ladies' colleges and women's paying guest (PG) accommodation addresses when people type the keywords "Bit**** near me".

It gets worse if you type the same on the Google Search app, be it on mobile or a computer: it lists fake dating services, which are escort services in disguise. Several people have taken to Twitter to criticise Google for equating the slang 'bitches' with women in its search service.

Who is to blame for this mess—Google or humans?

To be frank, both Google and people are equally responsible.

Earlier in the year at I/O 2018, the search engine giant showcased the company's Artificial Intelligence-powered 'Duplex', which mimicked natural human conversation. Google demonstrated two sample calls: one, in a woman's voice, set an appointment at a hair salon, and the other, in a male voice, reserved a table at a restaurant.

It left everyone attending the event with jaws dropped in awe of Google's technology, and it also led to a backlash in the media. Some expressed apprehension about the negative aspects of AI, claiming it might lead to job losses in call centres, while others went to the extreme, stating it might one day take over the planet from humans.

However, Google allayed those fears, saying that when the feature eventually comes to phones, it will always make itself known to the person on the other side of the call as Google Assistant. Also, the technology is not yet developed enough to steal jobs at telemarketing firms.

However, the AI technology makes use of machine learning algorithms that will continue to absorb information by observing human behaviour, be it how people speak, search for things or comment on the Internet. Eventually, one day, which by the way is not that far off, it will be fully developed into a humanoid, or pseudo-human if you like to call it that.

Google's machine learning is akin to kids, or even teenagers for that matter, who at an impressionable age tend to copy their elders' manner of speaking or actions. Here too, Google's search algorithm has picked up the slang word 'bitch', which people commonly use on social media channels to hurl abuse at women, and has wrongly equated 'bitches' with women.

So with machine learning, Duplex basically learned the tricks by closely observing human conversation, but it lacks a very important component, a 'moral compass'. No matter how difficult it is, Google should create an algorithm for it. Only then should this service be released for public use.

This also applies to Google's current search algorithm, which needs immediate attention. It seems the company has not learnt from past lessons. In 2015, Google was involved in a similar fiasco: when a user searched for "top 10 criminals in India" in image search, a photo of Narendra Modi, Prime Minister of India, appeared at the top of the results.

Going by the recent incident, it looks like Google has a long way to go in improving its search results, and it should not depend solely on observing human behaviour in its pursuit of seamless information fetching and other personalised services.

